First introduced in June and officially launched on December 6 at the Advancing AI event in San Jose, California, the Instinct MI300X targets AI training systems. During the event, AMD named Nvidia's H100 as its main competitor.

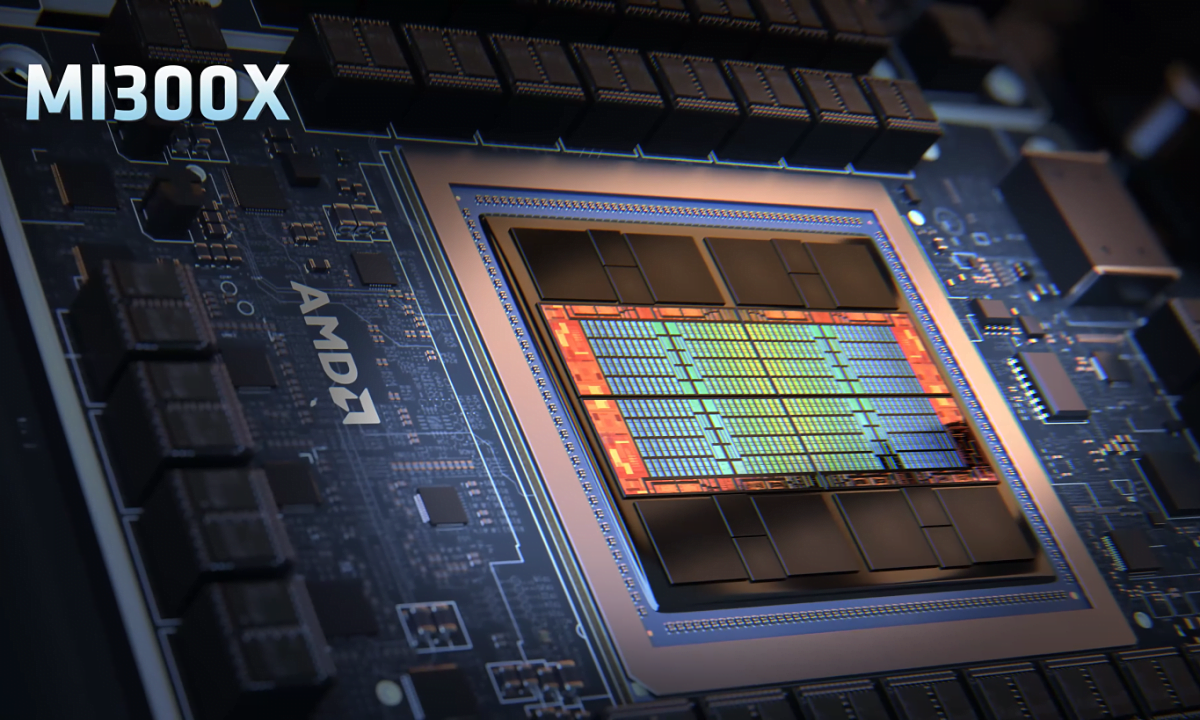

The MI300X is considered the pinnacle of AMD's latest chiplet design approach for graphics processing units (GPUs). It combines eight 12-high (12Hi) HBM3 memory stacks with eight 3D-stacked 5 nm CDNA 3 GPU chiplets, called XCDs. AMD calls this hybrid of 3D stacking and 2.5D packaging “3.5D”. The result is a 750 W chip with 304 compute units, 192 GB of HBM3 memory and 5.3 TB/s of memory bandwidth.

The MI300X is designed to operate in groups of eight chips. Connected through AMD's Infinity Fabric, the eight GPUs communicate with each other at a bandwidth of 896 GB/s, and the full eight-GPU platform delivers 10.4 petaflops (10.4 million billion operations per second).

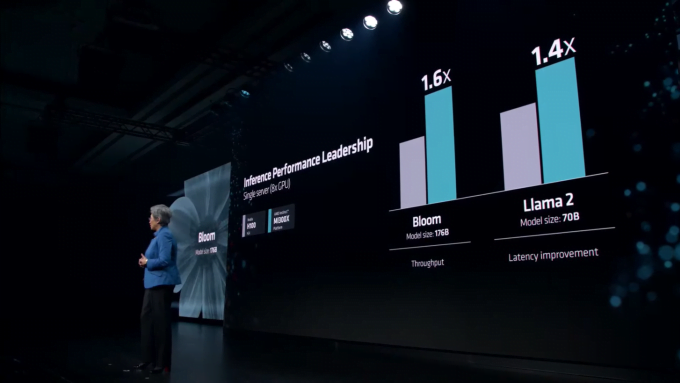

AMD CEO Lisa Su presents the MI300X's specifications and compares it with the Nvidia H100 on leading large language models (LLMs). Photo: Wccftech

According to AMD, the MI300X offers 2.4 times the memory capacity and 1.3 times the computing power of the H100, the Nvidia chip behind many recently released AI applications, including OpenAI's GPT-4. The larger memory lets the chip process more data at the same time, which helps increase computing performance.
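As a rough check on AMD's memory claim, the short Python sketch below divides the MI300X's 192 GB by the H100's capacity. The 80 GB H100 figure is an assumption (the capacity usually quoted for the H100 SXM); the article itself does not state it.

```python
# Memory capacities in GB.
# MI300X: 192 GB, as stated in the article.
# H100: 80 GB is an assumption (commonly quoted H100 SXM capacity), not from the article.
MI300X_HBM_GB = 192
H100_HBM_GB = 80


def capacity_ratio(a_gb: float, b_gb: float) -> float:
    """Return how many times larger capacity a_gb is than capacity b_gb."""
    return a_gb / b_gb


ratio = capacity_ratio(MI300X_HBM_GB, H100_HBM_GB)
print(f"MI300X / H100 memory: {ratio:.1f}x")  # prints "MI300X / H100 memory: 2.4x"
```

Under that assumption, the result matches the 2.4x figure AMD announced.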

In AMD's tests with large language models, the MI300X outperformed Nvidia's chip. Running Llama 2 70B (70 billion parameters) with FlashAttention 2, the AMD chip was 20% faster in a one-to-one (1v1) comparison. In an eight-GPU server comparison (8v8), the MI300X was 40% faster than the H100 on Llama 2 70B and up to 60% faster on Bloom 176B (176 billion parameters).

AMD Instinct MI300X chip. Photo: AMD

AMD emphasizes that the MI300X is on par with the H100 in training performance but is more competitively priced for the same workload. However, the company has not announced an official price.

Despite its strong specifications, the MI300X is not the most powerful GPU. In mid-November, Nvidia announced its latest chip, the H200, which it says offers nearly double the performance of the H100.

Besides the Instinct MI300X, AMD also introduced the Instinct MI300A, which it calls the world's first data center APU (Accelerated Processing Unit). Like the MI300X, it uses 3.5D packaging manufactured by TSMC, combining 5 nm and 6 nm process nodes across 13 stacked chiplets to create a chip with 24 CPU cores and 146 billion transistors. AMD says this is the largest chip it has ever produced. The company has not announced pricing for this product either.

“All the major attention is focused on AI chips,” AMD CEO Lisa Su said. “What our chips deliver will directly translate into a better user experience. When you ask something, the answer will appear faster, especially as the answers become more complex.”

Meanwhile, Meta and Microsoft said they will buy the Instinct MI300X to replace Nvidia chips. Meta said it will use the MI300X for AI inference workloads such as AI stickers, image editing and running its virtual assistant. Microsoft CTO Kevin Scott said the company will use AMD chips in its Azure cloud servers. OpenAI will also support the MI300X in Triton, its open-source programming language and compiler for writing GPU code, which is used in AI research.

AMD predicts its total data center GPU revenue will reach about $2 billion in 2024. The company also estimates that the AI GPU market could grow to $400 billion over the next four years. “Nvidia will take a big piece of the pie, but we will also get a fair share,” Ms. Su added.

Bao Lam (according to Wccftech, Tom's Hardware)
