Business Insider cites statistics showing that the number of AI applications and websites offering tools to “undress” women is on the rise. Users only need to upload an ordinary photo of a person, and the AI returns a nude image of that person within a few seconds.

According to social media analytics company Graphika, a group of 34 websites that allow “clothing removal” using AI attracted 24 million visits in September. Data from Sameweb also shows that on social networks such as X and Reddit, content containing referral links to these websites and applications has increased by more than 2,000% since the beginning of the year.

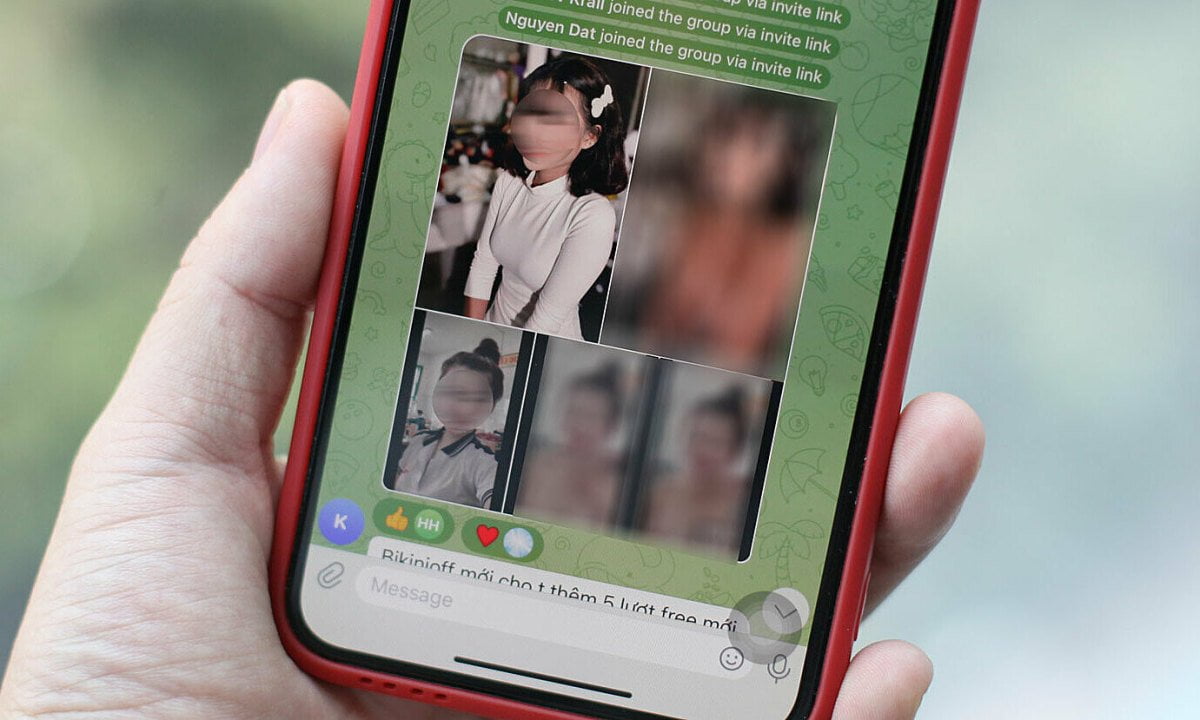

A Telegram group specializing in pornographic deepfake photo services has attracted thousands of participants. Photo: Khuong Nha

“The popularity of ‘undressing’ services using AI is increasing. The ability to reach a large number of users leads to other risks of online harm, such as creating and distributing nude photos without the consent of the person in the photo,” the Sameweb research team said.

According to experts, deepfake nude photos and videos first appeared many years ago, but only on a small scale because of technical limitations and hardware requirements. However, as the AI craze has taken off and chatbots have become increasingly easy to use, this content has flooded social networks. For example, in September, fake nude photos of more than 20 female students spread through several Spanish schools, shocking the community. According to El País, the photos were produced with an AI application.

Instagram, TikTok, YouTube, Twitter and Facebook all have policies on “prevention of misleading information”. However, most deepfake content goes uncontrolled because of its sheer volume. Meanwhile, the law does not provide specific sanctions for fake pornographic photos.

Major AI developers such as OpenAI have also announced the removal of pornographic content from the data used to train the Dall-E image-generation AI. Midjourney blocks keywords related to porn and encourages users to report sensitive content if they see it. Startup Stability AI updated its software in November 2022 to remove the ability to create obscene images with Stable Diffusion. However, users still find ways to circumvent these restrictions and create offensive photos.

According to Professor Cailin O’Connor at the University of California, deepfakes can be just as harmful as real images. “Even though they are fake, the impression planted in the viewer’s head still exists. Victims of deepfakes constantly have to face curious, mocking and disrespectful looks,” the professor said.

Khuong Nha (according to Business Insider)
